Meta AI Develops a More Compact Language Model for Mobile Devices

Meta AI researchers have announced the development of MobileLLM, a compact language model designed specifically for mobile devices.

Smartphone manufacturers have recently been investing heavily in artificial intelligence features for their devices. In line with this trend, Meta is developing a compact language model for devices with limited memory and computing power, such as mobile phones and tablets. The new model reflects a shift in efficient artificial intelligence toward smaller models that can run on the device itself.

The model, a collaboration between Meta Reality Labs, PyTorch, and Meta AI Research (FAIR), has fewer than 1 billion parameters. That makes it less than a thousandth the size of the largest models such as GPT-4, which is widely reported to have more than 1 trillion parameters, although OpenAI has not disclosed the figure.

Artificial intelligence is shrinking!

Yann LeCun, Meta’s chief artificial intelligence scientist, shared details of the research in a post on X. The language model, called MobileLLM, is set to bring artificial intelligence to smartphones and other devices.

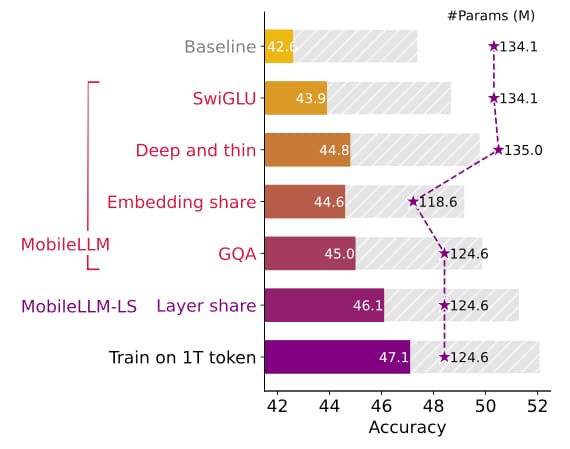

According to the study, small models perform better when they prioritize depth over width, that is, more layers with a smaller hidden dimension. LeCun also noted that the team improved efficiency on memory-constrained devices with weight-sharing techniques, including embedding sharing, grouped-query attention, and block-wise weight sharing.
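To make the weight-sharing techniques more concrete, here is a minimal Python sketch that counts the weights a decoder-only transformer must store under each one. The hyperparameters, the `Config` class, and the `layer_params` and `stored_params` helpers are hypothetical illustrations, not Meta's code or an official MobileLLM configuration. The sketch also assumes a SwiGLU-style feed-forward layer and ignores normalization weights and biases.

```python
from dataclasses import dataclass


@dataclass
class Config:
    # Hypothetical, illustrative hyperparameters (not an official MobileLLM config).
    vocab_size: int = 32_000
    d_model: int = 576
    n_layers: int = 30          # effective depth: blocks executed per forward pass
    n_heads: int = 9            # query heads
    n_kv_heads: int = 3         # key/value heads; fewer than n_heads = grouped-query attention
    ffn_hidden: int = 1536
    tie_embeddings: bool = True           # embedding sharing: input and output layers share one matrix
    share_adjacent_blocks: bool = False   # block-wise sharing: each stored block runs twice in a row


def layer_params(cfg: Config) -> int:
    """Weights in one transformer block, ignoring norms and biases."""
    head_dim = cfg.d_model // cfg.n_heads
    q_and_o = 2 * cfg.d_model * cfg.d_model
    # With grouped-query attention, K and V projections only produce n_kv_heads heads.
    k_and_v = 2 * cfg.d_model * cfg.n_kv_heads * head_dim
    # Assumed SwiGLU-style feed-forward: gate, up, and down projections.
    ffn = 3 * cfg.d_model * cfg.ffn_hidden
    return q_and_o + k_and_v + ffn


def stored_params(cfg: Config) -> int:
    """Parameters that must be kept in memory on the device."""
    embed = cfg.vocab_size * cfg.d_model
    output_head = 0 if cfg.tie_embeddings else embed
    # With adjacent-block sharing, only half of the executed blocks have unique weights.
    unique_blocks = cfg.n_layers // 2 if cfg.share_adjacent_blocks else cfg.n_layers
    return embed + output_head + unique_blocks * layer_params(cfg)


if __name__ == "__main__":
    print(stored_params(Config()))                            # 124600320
    print(stored_params(Config(tie_embeddings=False)))        # 143032320 (separate output layer)
    print(stored_params(Config(n_kv_heads=9)))                # 137871360 (full multi-head attention)
    print(stored_params(Config(share_adjacent_blocks=True)))  # 71516160
```

Note that block-wise sharing roughly halves the stored block weights without reducing computation, because each shared block still runs twice.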

Another notable result is that MobileLLM, with only 350 million parameters, performed on par with the 7-billion-parameter LLaMA-2 model on API-calling tasks.
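For a rough sense of what this size gap means on a phone, the following sketch does the back-of-the-envelope arithmetic for weight memory alone. It assumes nominal parameter counts (real checkpoints differ slightly) and the listed bytes-per-weight precisions, and it ignores activations, the KV cache, and runtime overhead, so actual memory use is higher. The names `MODELS`, `PRECISIONS`, and `weight_memory_gb` are illustrative.

```python
# Nominal parameter counts from the article; real checkpoints differ slightly.
MODELS = {"MobileLLM-350M": 350_000_000, "LLaMA-2 7B": 7_000_000_000}

# Bytes needed per weight at common storage precisions.
PRECISIONS = {"fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(n_params: int, bytes_per_param: float) -> float:
    """Memory for the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return n_params * bytes_per_param / 1e9


if __name__ == "__main__":
    ratio = MODELS["LLaMA-2 7B"] / MODELS["MobileLLM-350M"]
    print(f"LLaMA-2 7B has {ratio:.0f}x more parameters")  # 20x
    for name, n in MODELS.items():
        sizes = ", ".join(
            f"{p}: {weight_memory_gb(n, b):.3f} GB" for p, b in PRECISIONS.items()
        )
        print(f"{name}: {sizes}")
```

At 16-bit precision, for example, the 350M model needs about 0.7 GB for its weights versus about 14 GB for the 7B model, a far easier fit for a phone's memory.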